Scour the Internet

2025

The first and most crucial part of this project is collecting good data. Well-documented, categorized, cleaned, and meaningful data is the foundation of the entire project.

Identify Sources

Theoretical

When I started this project, I thought there might be a dozen or so military antiques websites on the internet. Militaria collecting—focused on military antiques like medals, uniforms, and flags—felt like a niche hobby, so I assumed there wouldn’t be too many sites catering to it.

I was pleasantly surprised to discover a vibrant and diverse community of collectors and dealers. There were sites for general military antiques and specialized ones for collectors of flags, medals, field gear, and even cookware. Many of these sites were small, run by a handful of people or sometimes just one person, which was reflected in the varying quality of their product information. These gaps in the data presented unique challenges that required creative solutions, which I’ll cover later.

Practical

Looking at the landscape of available data, I faced some realities and limitations. Platforms like eBay, Amazon, Craigslist, and Facebook were tempting because of their sheer scale but proved impractical. They were either too complex to scrape, too diluted with irrelevant data, or fraught with legal and ethical complications.

Instead, I focused on standalone militaria stores—sites I had personal experience with, had vetted myself, or came recommended by trusted sources. This focus narrowed my list to about 100 websites, each catering to a specific segment of the hobby, from mom-and-pop shops to niche specialists.

A photo I took of my favorite store, until it shut down a few years ago.

Build the Scraper

Theoretical

Building the scraper was by far the longest and most challenging aspect of this project. Over the course of three major restructures, I spent over a thousand hours refining the process. Initially, the goal was straightforward: collect the main product data points—title, description, price, URL, and availability.

However, as I learned better practices and refactored the program repeatedly, the scraper evolved significantly. I reduced server requests to both my infrastructure and the militaria sites, optimized the scraper’s logic for efficiency, and drastically improved data accuracy. Each iteration brought the project closer to being an intuitive, streamlined system.

Practical

I committed to around 50 hours a week of focused learning and development over several months, refining and improving the scraper whenever possible. Today, the program is a streamlined, step-by-step process that’s both efficient and reliable.

The basic layout of the scraper is as follows:

Access the Products Page: The scraper navigates to the target site’s product page, identifying key elements needed for the next steps.

Compile Product Lists: It gathers a comprehensive list of products on that specific page, including all essential metadata.

Compare with Existing Data: The product URLs are compared against those already in my database to identify discrepancies or new additions.

Process Discrepancies: If discrepancies in data points are detected—such as changes in availability or price—further processing is triggered.

Database Updates: New products are added to the database, and any detected changes are logged and updated efficiently.
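The comparison and discrepancy steps above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `Product` fields, the `diff_products` helper, and the example.com URLs are all hypothetical, and the sketch assumes stored products are keyed by URL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    url: str
    title: str
    price: float
    available: bool


def diff_products(scraped, existing):
    """Split scraped products into new ones and ones whose tracked fields changed.

    `existing` maps product URL -> Product as currently stored (an assumption).
    """
    new, changed = [], []
    for product in scraped:
        stored = existing.get(product.url)
        if stored is None:
            new.append(product)  # URL not in the database yet
        elif (stored.price, stored.available) != (product.price, product.available):
            changed.append((stored, product))  # price or availability differs
    return new, changed


existing = {
    "https://example.com/p/1": Product("https://example.com/p/1", "Iron Cross", 120.0, True),
    "https://example.com/p/2": Product("https://example.com/p/2", "US Visor Cap", 80.0, True),
}
scraped = [
    Product("https://example.com/p/1", "Iron Cross", 120.0, True),    # unchanged
    Product("https://example.com/p/2", "US Visor Cap", 75.0, True),   # price changed
    Product("https://example.com/p/3", "Field Canteen", 40.0, True),  # not seen before
]

new, changed = diff_products(scraped, existing)
print([p.url for p in new])
print([(old.price, cur.price) for old, cur in changed])
```

Only the products in `new` and `changed` would need a database write, which is what keeps the update step cheap.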

Logic flow of my web scraping program.

Handle Dynamic Challenges

Theoretical

Initially, I tried hosting the database and program on my Raspberry Pi, but it quickly became clear this wasn’t feasible. The Raspberry Pi lacked the stability and storage needed for a project of this scale. Switching to Amazon Web Services solved these issues, providing consistent processing power and scalable storage.

Another major overhaul was moving away from using eval() for executing site-specific scraping logic. While it worked initially, it was unreliable, insecure, and prone to errors. Instead, I implemented JSON configuration files for each site. These files now store site-specific details, which the program retrieves, interprets, and executes safely.

Tracking historical changes was another challenge. Originally, any changes to a product’s data—like price or description—would overwrite the old information. This led to the loss of valuable insights. I revamped the database to include columns for historical data, allowing me to preserve and analyze changes over time.
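One way to preserve old values instead of overwriting them is sketched below. It makes two assumptions that differ from the project itself: it uses Python's built-in `sqlite3` rather than PostgreSQL so that it runs anywhere, and it archives old prices in a separate `price_history` table rather than in extra columns. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (
        url   TEXT PRIMARY KEY,
        title TEXT NOT NULL,
        price REAL NOT NULL
    );
    CREATE TABLE price_history (
        url        TEXT NOT NULL REFERENCES products(url),
        price      REAL NOT NULL,
        changed_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
    );
""")


def upsert_price(conn, url, title, price):
    """Insert a product, or update its price while archiving the old one."""
    row = conn.execute("SELECT price FROM products WHERE url = ?", (url,)).fetchone()
    if row is None:
        conn.execute(
            "INSERT INTO products (url, title, price) VALUES (?, ?, ?)",
            (url, title, price),
        )
    elif row[0] != price:
        # Archive the previous price before overwriting it.
        conn.execute("INSERT INTO price_history (url, price) VALUES (?, ?)", (url, row[0]))
        conn.execute("UPDATE products SET price = ? WHERE url = ?", (price, url))
    conn.commit()


upsert_price(conn, "https://example.com/p/1", "Iron Cross", 120.0)
upsert_price(conn, "https://example.com/p/1", "Iron Cross", 110.0)
upsert_price(conn, "https://example.com/p/1", "Iron Cross", 110.0)  # no change, nothing archived

current = conn.execute("SELECT price FROM products").fetchone()[0]
history = [r[0] for r in conn.execute("SELECT price FROM price_history ORDER BY rowid")]
print(current, history)
```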

Practical

Dynamic Websites: Many sites relied heavily on JavaScript, requiring Selenium for interaction. Handling these layouts added complexity but was essential for modern sites.

Site-Specific Configurations: I used JSON profiles to account for each site’s unique structure, significantly improving flexibility and maintainability.

Smarter Availability Checks: Instead of brute-forcing URLs, the scraper now checks the first few pages of each site for new products. For older products, it uses a process of elimination to mark them as unavailable without needing to visit every URL.

Old Configuration Format

Title: "title_element": "productSoup.find('h1', class_='product_title entry-title').text",

Description: "desc_element": "productSoup.find('div',class_='woocommerce-Tabs-panel woocommerce-Tabs-panel--description panel entry-content wc-tab').text",

Price: "price_element": "productSoup.find('span',class_='woocommerce-Price-amount amount').text",

Availability: "available_element": "True if productSoup.find('p', class_='stock in-stock') else False",

New Configuration Format

Title for Product Tile:

"tile_title": {
    "method": "find",
    "args": ["h2"],
    "kwargs": { "class_": "woocommerce-loop-product__title" },
    "extract": "text",
    "post_process": { "type": "strip" }
},

Title for Product Details Page:

"details_title": {
    "method": "find",
    "args": ["h1"],
    "kwargs": { "class_": "product_title entry-title" },
    "extract": "text",
    "post_process": { "type": "strip" }
},
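A rule in the new format could be interpreted without `eval()` along the following lines. This is a hedged sketch, not the project's interpreter: `apply_rule`, `ALLOWED_METHODS`, and `POST_PROCESSORS` are hypothetical names, and `FakeSoup` is a tiny stand-in used only so the example runs without BeautifulSoup installed. In practice a parsed BeautifulSoup object would be passed instead.

```python
import json

ALLOWED_METHODS = {"find", "select_one"}   # the only lookups a config may call
POST_PROCESSORS = {"strip": str.strip}     # named clean-up steps


def apply_rule(node, rule):
    """Run one extraction rule against a parsed-HTML node; return a value or None."""
    if rule["method"] not in ALLOWED_METHODS:
        raise ValueError(f"method not allowed: {rule['method']}")
    found = getattr(node, rule["method"])(*rule.get("args", []), **rule.get("kwargs", {}))
    if found is None:
        return None  # element missing on this page
    value = found.text if rule.get("extract") == "text" else found
    post = rule.get("post_process")
    if post:
        value = POST_PROCESSORS[post["type"]](value)
    return value


class FakeTag:
    def __init__(self, text):
        self.text = text


class FakeSoup:
    """Stand-in for a BeautifulSoup object (illustration only)."""

    def find(self, name, class_=None):
        if (name, class_) == ("h1", "product_title entry-title"):
            return FakeTag("  US Visor Cap \n")
        return None


config = json.loads("""
{
  "details_title": {
    "method": "find",
    "args": ["h1"],
    "kwargs": {"class_": "product_title entry-title"},
    "extract": "text",
    "post_process": {"type": "strip"}
  }
}
""")

title = apply_rule(FakeSoup(), config["details_title"])
print(repr(title))
```

Because the config only names a method and its arguments, a malformed or malicious profile can at worst raise an error; it cannot run arbitrary code the way an `eval()` string can.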

Improve the Data with AI and Machine Learning

Part 1: AI-Driven Classification Using OpenAI

Improve the Data

Initially, the data collected was minimal: URL, title, description, price, availability, and date. As the project evolved, so did the database. By the final overhaul, it included more comprehensive fields like site, product ID, date sold, OpenAI-generated classifications (conflict, nation, item type), extracted IDs, currency, grade, price history, and various image URLs.

One major challenge was inconsistency in categorization. While a few sites provided helpful categories, most lacked any structure. To address this, I manually labeled batches of products based on patterns in their names (for example, "Iron Cross" typically relates to Germany and WWII). This foundational dataset, combined with the small amount of site-categorized data, became the basis for automated categorization.

The Role of OpenAI

Using the OpenAI API, I scaled up the categorization process. I fed product descriptions and other metadata to the model, which predicted classifications such as nation of origin, conflict period, and item type. This converted the database from a large collection of unstructured raw data into a structured, orderly resource.

For example, "US Visor Cap" was classified as:

Nation: "USA"

Conflict: "WWII"

Item Type: "HEADGEAR"

OpenAI’s capabilities allowed me to label data far faster than I could have manually.
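The prompt-and-validate pattern behind this kind of classification might look like the sketch below. It deliberately makes no API call: the prompt wording, the label sets in `ALLOWED`, and the `build_prompt` and `parse_reply` helpers are all assumptions for illustration, and `reply` is a hand-written stand-in for a model response.

```python
import json

# Hypothetical label sets; the project's real categories are not listed in the text.
ALLOWED = {
    "nation": {"USA", "GERMANY", "UK", "UNKNOWN"},
    "conflict": {"WWI", "WWII", "UNKNOWN"},
    "item_type": {"HEADGEAR", "MEDAL", "UNIFORM", "UNKNOWN"},
}


def build_prompt(title, description):
    """Build a classification prompt that asks for a strict JSON reply."""
    options = "\n".join(
        f"- {field}: one of {sorted(values)}" for field, values in ALLOWED.items()
    )
    return (
        "Classify this militaria listing. Reply with a JSON object only.\n"
        f"{options}\n\n"
        f"Title: {title}\nDescription: {description}"
    )


def parse_reply(reply_text):
    """Validate a model reply; fall back to UNKNOWN on anything unexpected."""
    try:
        data = json.loads(reply_text)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    result = {}
    for field, values in ALLOWED.items():
        label = str(data.get(field, "UNKNOWN")).upper()
        result[field] = label if label in values else "UNKNOWN"
    return result


prompt = build_prompt("US Visor Cap", "Officer's visor cap with eagle badge.")
reply = '{"nation": "USA", "conflict": "WWII", "item_type": "Headgear"}'  # stand-in reply
labels = parse_reply(reply)
print(labels)
```

Normalizing case and rejecting labels outside the allowed sets keeps stray model output from creating near-duplicate categories in the database.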

Impact of Automation

Initial Dataset: ~10,000 manually/site-categorized data points.

Post-OpenAI API: Expanded to ~150,000 AI-classified entries.

Part 2: Machine Learning Classification

Objective

Once the OpenAI API provided a substantial set of categorized data (~150,000 entries), the next step was to use this data as a training set for my machine learning program. The goal was to build a model capable of handling future classifications without relying on the OpenAI API (which can get expensive).

Building the Machine Learning Model

Data Preparation:

Used the OpenAI-categorized dataset to extract titles, descriptions, and metadata as features.

Target labels included nation, conflict, and item type classifications.

Preprocessed the text data by:

Removing punctuation.

Lowercasing the text.

Tokenizing and vectorizing the features (e.g., TF-IDF).
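The preprocessing steps above can be illustrated with a small standard-library sketch. It is not the project's pipeline: the `tokenize` and `tfidf` helpers are hypothetical, and the weighting is the plain textbook form tf * log(N / df). A library vectorizer such as scikit-learn's `TfidfVectorizer` applies smoothing and normalization by default, so its numbers would differ.

```python
import math
import string
from collections import Counter


def tokenize(text):
    """Lowercase, remove punctuation, and split on whitespace."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return cleaned.split()


def tfidf(documents):
    """Return one {token: weight} dict per document using tf * log(N / df)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each token appears.
    doc_freq = Counter(token for tokens in tokenized for token in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            token: (count / len(tokens)) * math.log(n_docs / doc_freq[token])
            for token, count in counts.items()
        })
    return vectors


titles = ["US Visor Cap, WWII", "German Iron Cross (WWII)", "US Field Canteen"]
vectors = tfidf(titles)
print(tokenize(titles[0]))
print(vectors[0])
```

Tokens that appear in fewer titles ("visor") receive more weight than tokens shared across titles ("us"), which is what lets a classifier focus on the distinguishing words.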

Model Training:

Experimented with several models, including:

Logistic Regression for simplicity and interpretability.

Random Forests for robust, non-linear patterns.

Support Vector Machines (SVM) for high-dimensional text data.

Split the data into training and testing sets to evaluate performance.

Results and Insights:

Models achieved high accuracy due to the consistent and well-structured OpenAI classifications.

Example output for "US Visor Cap":

Predicted Nation: "USA"

Predicted Conflict: "WWII"

Predicted Item Type: "Headgear"

Advantages of Local Machine Learning

Cost Efficiency: Reduced reliance on the OpenAI API for future classifications.

Scalability: Enabled local processing of large datasets without additional API calls.

Customization: Allowed fine-tuning of the model based on specific project needs.

Graphs to the Right

The two graphs on the right illustrate how well the nation classification performed, though the same process was applied to all three classifications (nation, conflict, and item type).

The upper graph represents how accurately the model predicted each country. In an ideal scenario, the diagonal line would approach 100% from one corner to the other, indicating near-perfect accuracy. However, the results show some variability. This is likely due to countries with overlapping historical or militaristic ties causing confusion for the model. For example, Ireland and the United Kingdom share similarities in many WWII contexts, and China and the United States often overlap due to the U.S. supplying substantial military aid and expertise to China during WWII.

The lower graph explores the relationship between the number of rows of data each country had and the model's accuracy at classifying that country. Unsurprisingly, countries with larger datasets tend to have higher accuracy since the model has more examples to learn from. On the other hand, countries with limited data points, or those with inherently ambiguous connections to other nations, resulted in lower accuracy.

By examining these graphs, it's clear that while the model performed well overall, there’s room for improvement, particularly in cases where historical or contextual overlaps between countries exist.
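The two quantities plotted in these graphs, per-nation accuracy and the number of rows per nation, can both be read off a confusion matrix. The sketch below shows the arithmetic with made-up counts; the `per_class_accuracy` helper and the numbers are purely illustrative and are not the project's results. "Accuracy" here means the diagonal cell divided by its row total, which is also known as per-class recall.

```python
def per_class_accuracy(matrix, labels):
    """Return {label: (support, accuracy)}; rows are true labels, columns predictions."""
    stats = {}
    for i, label in enumerate(labels):
        support = sum(matrix[i])   # number of rows whose true label is `label`
        correct = matrix[i][i]     # diagonal cell: predicted label matches
        stats[label] = (support, correct / support if support else 0.0)
    return stats


labels = ["USA", "Germany", "Ireland"]
# Made-up counts for illustration only.
matrix = [
    [90, 8, 2],    # true USA
    [5, 190, 5],   # true Germany
    [1, 1, 8],     # true Ireland
]

stats = per_class_accuracy(matrix, labels)
for label, (support, accuracy) in sorted(stats.items(), key=lambda kv: kv[1][0]):
    print(f"{label:8s} rows={support:4d} accuracy={accuracy:.2f}")
```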

Above is a confusion matrix showing how well my model labelled titles and descriptions with the appropriate nation.

Above is the relationship between the number of data points I have for a given nation and the model's accuracy for that nation. As can be seen, more data generally means higher accuracy.

Keep Data Fresh

Theoretical

Originally, the scraper sent parallel requests using ThreadPoolExecutor and semaphores, which worked but had significant downsides:

Cost: Constant requests drove up AWS expenses.

Scalability: The program couldn’t handle increasing data efficiently.

Site Load: The sheer number of requests placed unnecessary strain on the websites.

I overhauled the scraper to:

Start at the first page of each site and only proceed if new products are detected.

Use a process of elimination to mark products as unavailable without visiting every URL.

Scrape at consistent intervals throughout the day.
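The first two points in this list could be sketched as below. Several things here are assumptions rather than facts from the project: listing pages are taken to show only in-stock products with the newest first, `fetch_page` is a hypothetical callable returning the product URLs on a given listing page, and elimination is only applied after a complete walk of the listing pages.

```python
def crawl_listing(fetch_page, known_urls, max_pages=50):
    """Walk listing pages in order, stopping at the first page with nothing new.

    Returns (new_urls, seen_urls, reached_end).
    """
    new_urls, seen_urls = [], set()
    for page in range(1, max_pages + 1):
        urls = fetch_page(page)
        if not urls:
            return new_urls, seen_urls, True    # ran past the last page
        seen_urls.update(urls)
        fresh = [u for u in urls if u not in known_urls]
        new_urls.extend(fresh)
        if not fresh:
            return new_urls, seen_urls, False   # nothing new here: stop early
    return new_urls, seen_urls, False


def mark_unavailable(known_available, seen_urls):
    """Process of elimination: products believed in stock but no longer listed."""
    return known_available - seen_urls


# Fake three-page site, newest products first.
pages = {1: ["p5", "p4"], 2: ["p3", "p2"], 3: ["p1"]}
known = {"p4", "p3", "p2", "p1", "p0"}


def fetch(n):
    return pages.get(n, [])


# Routine run: stops after page 2 because that page holds nothing new.
new_urls, seen, reached_end = crawl_listing(fetch, known)
print(new_urls, reached_end)

# Full walk (no early stop) so that elimination is safe to apply.
_, seen_all, full_walk = crawl_listing(fetch, set())
gone = mark_unavailable(known, seen_all) if full_walk else set()
print(sorted(gone))
```

Both passes request listing pages only, never individual product pages, which is where the reduction in requests comes from.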

Practical

These changes reduced scraping times from hours to minutes, cut costs significantly, and made the program more scalable and considerate to the sites.

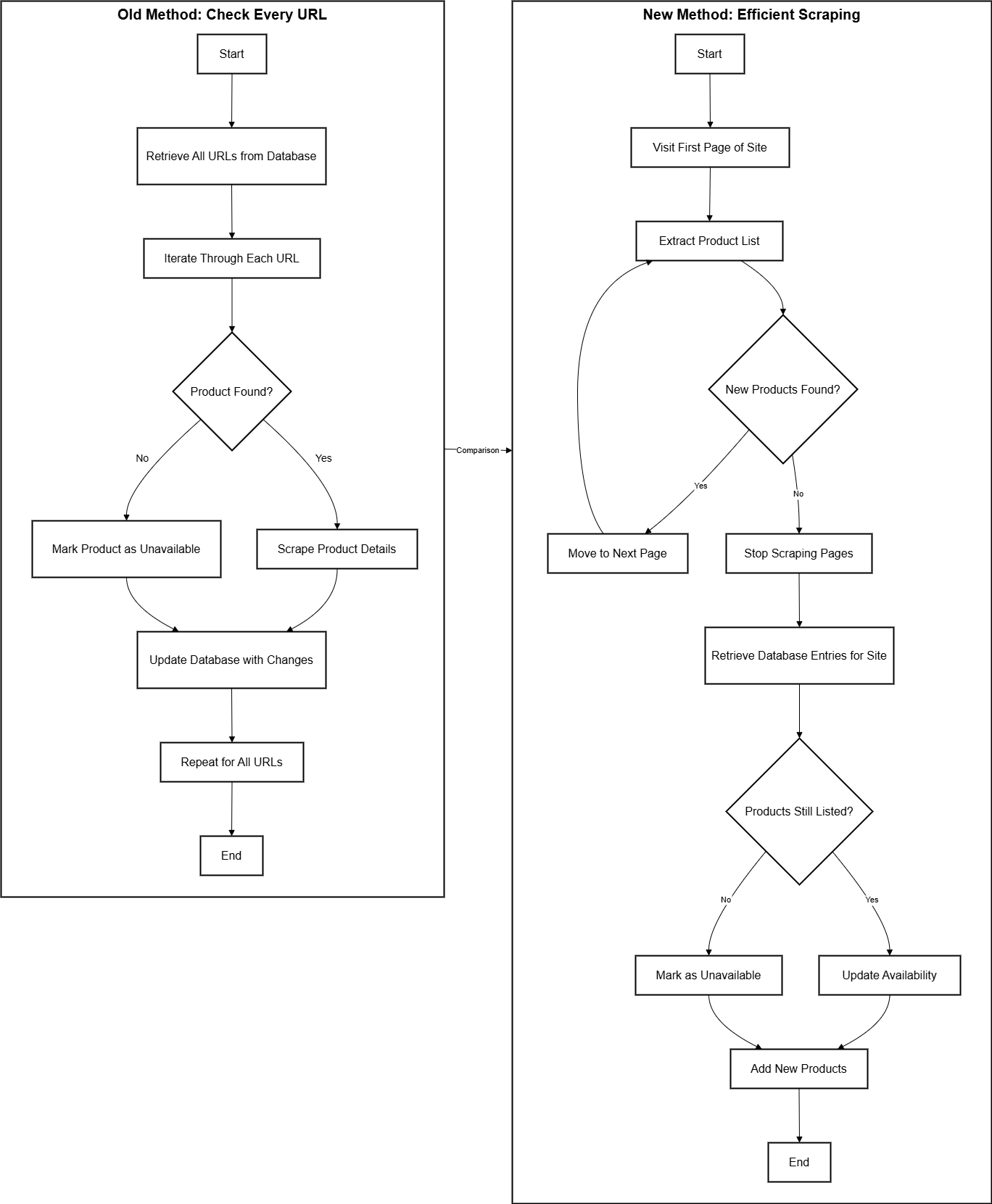

Old vs. New Scraping Methods: A Comparative Breakdown

The chart on the right illustrates the evolution of the scraping logic in this project.

Old Method:

The program iterated through every URL stored in the database, checking each product one by one.

This approach was exhaustive but inefficient, leading to increased costs, strain on external servers, and lengthy scraping times.

New Method:

The program now starts by scraping the first page of a site and only continues to additional pages if new products are detected.

Products not listed on the site are marked unavailable through a process of elimination, reducing unnecessary requests.

This approach is significantly faster, more scalable, and minimizes the load on both the infrastructure and the sites being scraped.

This transformation highlights the balance between precision and efficiency, ensuring up-to-date data with fewer resources and reduced external server strain.

Skills and Technologies

AI-Powered Data Enrichment

OpenAI GPT API: Used for intelligent classification of missing fields like nation, conflict, and item type.

Prompt Engineering: Designed prompts to ensure consistent and accurate classifications.

Cloud Technologies

AWS Services: EC2, RDS, S3

Managed infrastructure for scalable operations.

Configured secure communication between services using IAM roles and security groups.

Programming and Automation

Python Libraries: BeautifulSoup, Selenium, psycopg2, pandas, logging

Streamlined data handling, database integration, and logging for debugging.

Database Management

PostgreSQL:

Designed to store and analyze large datasets with minimal latency.

Optimized queries for data cleaning and batch updates.

Historical tracking of price and availability changes.

Version Control and Deployment

GitHub Integration:

Maintained version control and seamless updates.

Automated deployments via secure SSH.